LONDON (AP) – AI fakery is quickly becoming one of the biggest problems we face online. The rise and misuse of generative artificial intelligence tools have resulted in a proliferation of deceptive photos, videos, and audio.

AI deepfakes depicting everyone from Taylor Swift to Donald Trump are popping up almost every day, making it increasingly difficult to tell what's real and what's not. Video and image generators like DALL-E, Midjourney, and OpenAI's Sora make it easy for people without technical skills to create deepfakes. Just type in your request and the system will spit it out for you.

These fake images may seem harmless. However, they can be used for fraud, identity theft, propaganda and election manipulation.

Here's how to avoid being fooled by deepfakes.

How to spot a deepfake

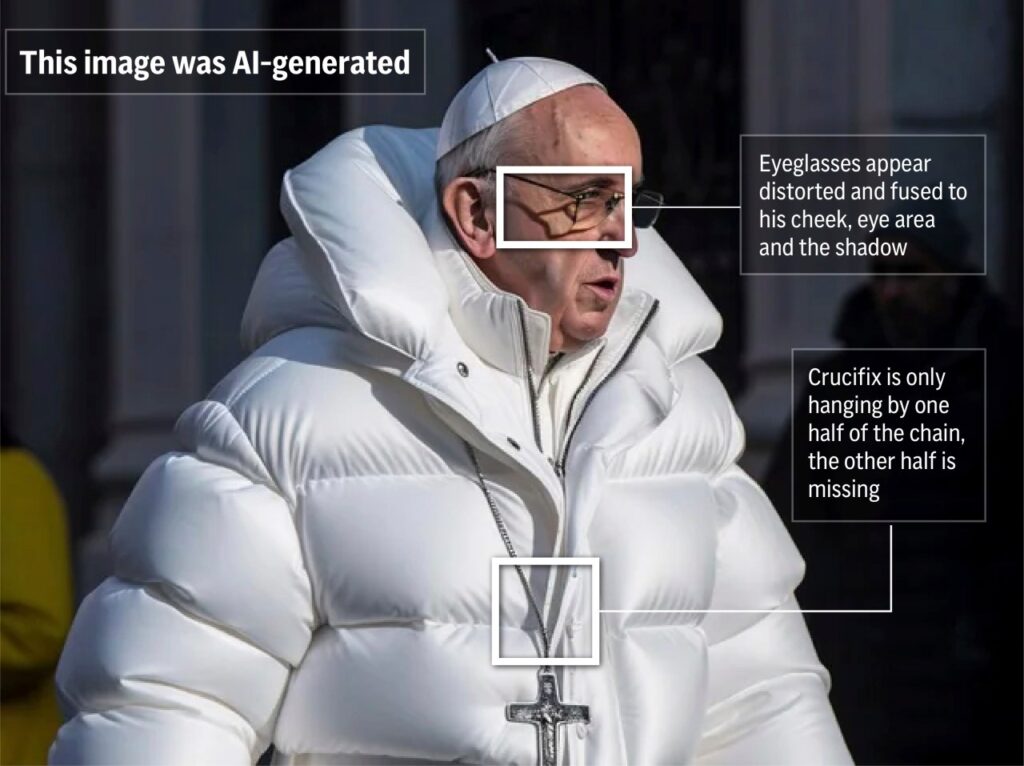

In the early days of deepfakes, the technology was far from perfect and often left obvious signs of manipulation. Fact checkers have pointed out images with obvious errors, such as a hand with six fingers and glasses with lenses of different shapes.

However, as AI has improved, spotting fakes has become much more difficult. Some widely shared advice, such as looking for unnatural blinking patterns in people in deepfake videos, no longer holds, said Henry Ajder, founder of the consulting firm Latent Space Advisory and a leading expert on generative AI.

Still, there are some things to look for, he said.

Many AI deepfake photos, especially those of people, have an electronic sheen to them that gives them “a sort of aesthetic smoothing effect” and makes the skin look “incredibly polished,” Ajder said.

However, he cautioned that creative prompts can eliminate these and many other signs of AI manipulation.

Check the consistency of shadows and lighting. Often the subject is clearly in focus and looks real, but the background elements may not be as realistic or sophisticated.

Look at the faces

Face swapping is one of the most common deepfake techniques. Experts advise looking closely at the edges of the face. Does the skin tone of the face match the rest of the head and body? Are the edges of the face sharp or blurry?

If you suspect that a video of a person speaking has been doctored, look at their mouth. Do their lip movements match the audio perfectly?

Ajder suggests looking at the teeth. Are they clear, or are they blurry and somehow inconsistent with how they look in real life?

Cybersecurity firm Norton says the lack of individual tooth outlines could be a clue, as the algorithm may not yet be sophisticated enough to generate individual teeth.

Think about the bigger picture

Sometimes context is important. Think for a moment whether what you are seeing is plausible.

The journalism website Poynter advises that if you see a public figure doing something that seems “exaggerated, unrealistic, or unbelievable,” it could be a deepfake.

For example, would the pope really be wearing an expensive puffer jacket, as depicted in the infamous fake photo? If he did, wouldn't additional photos or videos have been published by legitimate sources?

Find fakes using AI

Another approach is to use AI to fight AI.

Microsoft has developed an authenticator tool that can analyze photos and videos and give a confidence score on whether they have been manipulated. Chipmaker Intel's FakeCatcher uses algorithms to analyze the pixels in an image to determine whether it is real or fake.

There are online tools that claim to sniff out fakes when you upload files or paste links to questionable material. However, some, such as Microsoft's authenticator, are available only to selected partners and not to the public. That is because researchers don't want to tip off the bad guys and give them a bigger advantage in the deepfake arms race.

Open access to detection tools can also give people the impression that they are “god-like technologies to which critical thinking can be outsourced,” when people should instead be aware of the tools' limitations, Ajder said.

The hurdles to finding fakes

That said, artificial intelligence is advancing at breakneck speed, with AI models being trained on internet data to produce increasingly high-quality content with fewer flaws.

That means there is no guarantee that this advice will still be valid even a year from now.

Experts say it may even be dangerous to put the burden on ordinary people to become digital Sherlocks. That could give the public a false sense of confidence, as deepfakes become increasingly difficult to spot even for trained eyes.

___

Swenson reported from New York.

___

The Associated Press receives support from several private foundations to enhance our explanatory coverage of elections and democracy. Learn more about AP's Democracy Initiative. AP is solely responsible for all content.

Copyright 2024 Associated Press. All rights reserved. This material may not be published, broadcast, rewritten, or redistributed.