Google's AI research lab, Google DeepMind, has published new research on training AI models that it claims can improve both training speed and energy efficiency by roughly an order of magnitude, requiring up to 13x fewer training iterations and 10x less computation than alternative methods. The new method, called JEST, arrives as debate heats up over the environmental impact of AI data centers.

DeepMind's technique, called JEST (Joint Example Selection), departs from traditional AI training techniques. Typical training methods select individual data points to learn from, whereas JEST selects entire batches. JEST first trains a small AI model on data from a very high-quality, curated source and uses it to grade and rank batches by quality. It then compares those grades with a larger, lower-quality dataset. The small model determines which batches are best for training, and a larger model is then trained on the batches it selects.
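The batch-level selection described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not DeepMind's implementation: it assumes the "learnability" idea described in the JEST paper (favor data whose loss is high under the model being trained, the "learner", but low under a reference model trained on curated data), uses small made-up pairwise loss tables, and replaces whatever approximate search a real system would use with a brute-force search that only works on tiny batches. The names `batch_learnability` and `select_sub_batch` are hypothetical.

```python
from itertools import combinations
from typing import Sequence, Tuple

# Hypothetical pairwise loss tables: entry [i][j] is the loss contribution of
# example i when example j shares its batch (e.g. as a contrastive negative).
Matrix = Sequence[Sequence[float]]


def batch_learnability(learner: Matrix, reference: Matrix, batch: Sequence[int]) -> float:
    """Joint learnability of a sub-batch: learner loss minus reference loss,
    summed over every (i, j) pair inside the batch. The off-diagonal terms
    couple examples, so the score depends on the batch's composition."""
    return sum(learner[i][j] - reference[i][j] for i in batch for j in batch)


def select_sub_batch(learner: Matrix, reference: Matrix, k: int) -> Tuple[int, ...]:
    """Return the indices of the size-k sub-batch with the highest joint
    learnability, found by brute force over all combinations. This is only
    feasible for toy super-batches; large batches need an approximate search."""
    n = len(learner)
    if not 0 < k <= n:
        raise ValueError("k must be between 1 and the super-batch size")
    return max(
        combinations(range(n), k),
        key=lambda batch: batch_learnability(learner, reference, batch),
    )


# Toy super-batch of four candidate examples (made-up numbers).
learner_loss = [
    [3.0, 0.0, 0.0, 0.0],
    [0.0, 2.0, 5.0, 0.0],
    [0.0, 5.0, 2.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
]
reference_loss = [
    [0.5, 0.0, 0.0, 0.0],
    [0.0, 0.5, 1.0, 0.0],
    [0.0, 1.0, 0.5, 0.0],
    [0.0, 0.0, 0.0, 0.5],
]

best = select_sub_batch(learner_loss, reference_loss, k=2)
print(best, batch_learnability(learner_loss, reference_loss, best))
```

Scored one example at a time (the diagonal terms only), example 0 would rank first; scoring the sub-batch jointly instead picks examples 1 and 2, whose pairwise terms reinforce each other.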

The paper itself provides a more detailed explanation of the process used in the study and of directions for future research.

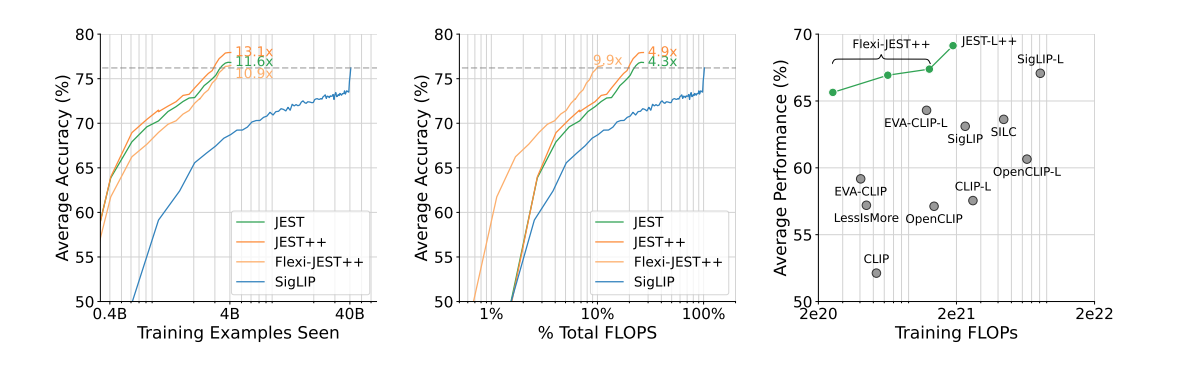

In their paper, DeepMind researchers make it clear that this “ability to direct the data selection process towards a distribution of smaller, well-curated datasets” is crucial to the success of the JEST method. Success is a good word for this research: DeepMind claims that “our approach outperforms state-of-the-art models by requiring up to 13x fewer iterations and 10x fewer computations.”

Of course, the system depends entirely on the quality of its training data: this bootstrapping technique only works if you start with a top-quality, human-curated dataset. Nowhere is the adage “garbage in, garbage out” more applicable than with this method of “jumping ahead” in the training process. This makes JEST much harder for hobbyists and amateur AI developers to use than most other methods, since curating the best initial training data will likely require expert-level research skills.

The JEST research arrives as the tech industry and governments around the world begin to debate artificial intelligence's massive power demands. AI workloads consumed about 4.3 GW in 2023, roughly the annual electricity consumption of the Republic of Cyprus. And things certainly aren't slowing down: a single ChatGPT request uses about 10 times as much energy as a Google search, and Arm's CEO predicts that AI could consume a quarter of the electricity on the U.S. power grid by 2030.
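Gigawatts measure a rate of power draw, not a yearly total. Converting the 4.3 GW figure into annual energy, under the simplifying assumption that the draw is sustained around the clock for all 8,760 hours of a non-leap year, gives:

$$
4.3\,\text{GW} \times 8760\,\text{h} = 37{,}668\,\text{GWh} \approx 37.7\,\text{TWh}
$$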

It remains to be seen whether, and how, the JEST method will be adopted by the biggest players in AI. With GPT-4o reportedly costing $100 million to train, and future larger models likely to reach the $1 billion mark soon, companies may well be looking for ways to cut costs. Optimists hope that JEST will be used to maintain current training productivity at significantly lower power consumption, reducing the cost of AI and helping the planet. However, it is far more likely that deep-pocketed companies will keep their foot on the gas, using JEST to keep power consumption at its maximum in pursuit of lightning-fast training output. Which will win: cost reduction or output scale?