Apple said Friday it would voluntarily implement safeguards for its artificial intelligence (AI), joining other big tech companies including OpenAI, Amazon, Google parent Alphabet and Meta in following Biden administration guidelines aimed at minimizing national security risks.

In July 2023, the Biden administration announced it had secured voluntary commitments from seven major AI companies that pledged to “help advance progress toward safety, security, and transparency” of AI technology.

Amazon, Anthropic, Google, Inflection, Meta, Microsoft, and OpenAI were the first seven companies to endorse the administration's initiative.

Companies are being asked to transparently share the results of tests that measure compliance with security and anti-discrimination regulations.

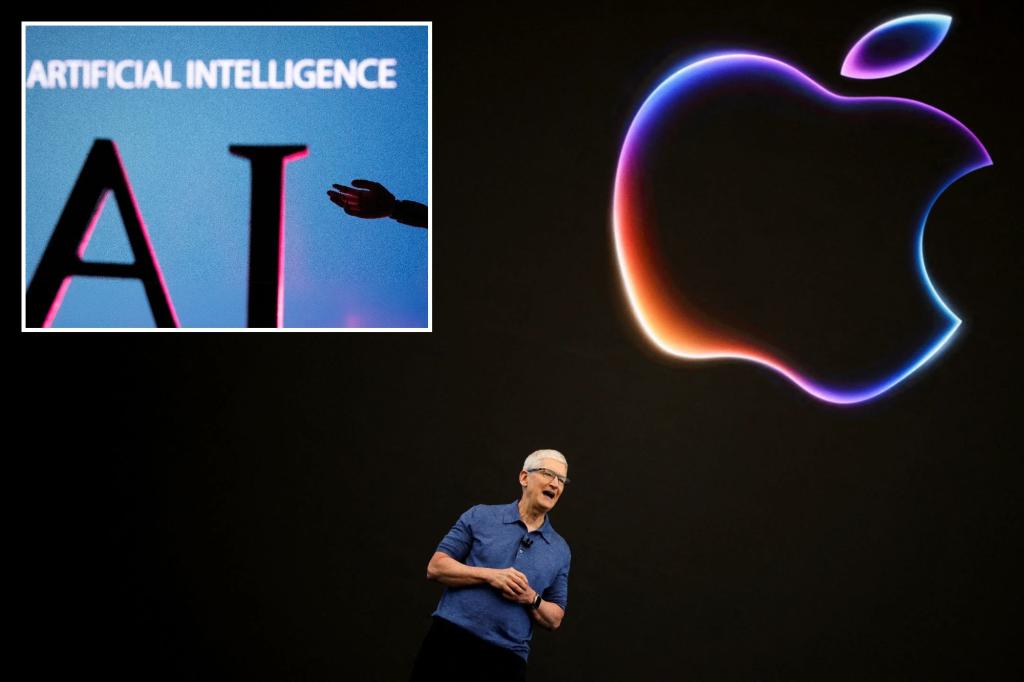

Apple followed its tech rivals last month when it announced it would build AI capabilities into its flagship products, including the iPhone, iPad and Mac.

The Cupertino, California-based giant announced a series of free software updates dubbed “Apple Intelligence” in an effort to catch up with Silicon Valley rivals such as Microsoft and Google, which are leaps and bounds ahead in the AI arms race.

At its annual Worldwide Developers Conference last month, Apple announced that it would use OpenAI's ChatGPT to make its virtual assistant Siri smarter and more helpful.

The optional Siri gateway to ChatGPT will be free for all iPhone users and will be available on other Apple products once the option is built into Apple's next-generation operating system.

ChatGPT subscribers should be able to easily sync their existing accounts when using an iPhone and get access to more advanced features than free users.

Apple's full set of upcoming features will only work on the latest models of iPhones, iPads, and Macs because they require advanced processors in the devices.

For example, to make the most of Apple's AI package, consumers will need to buy last year's iPhone 15 Pro or the next model coming later this year. All of the tools will also work on any Mac from 2020 or later once the next operating system is installed on that computer.

The rapid advancement of AI technology has sparked debate among technology commentators about the risks it could pose to the economy, national security, and even human survival.

Last month, a group of AI whistleblowers alleged that Google and OpenAI were rushing to develop new technology that was putting humanity at risk.

The open letter, signed by current and former employees of OpenAI, Google DeepMind, and Anthropic, warned that “AI companies have strong financial incentives to avoid effective oversight” and pointed to the lack of federal regulation of the development of advanced AI.

“Companies are racing to develop and deploy ever more powerful artificial intelligence while ignoring the risks and impacts of AI,” Daniel Kokotajlo, one of the letter's organizers and a former OpenAI employee, said in a statement.

“I decided to leave OpenAI because I have lost hope that they will act responsibly, especially in their pursuit of artificial general intelligence.”

Government and private researchers worry that models that summarize information and generate content from vast amounts of text and images could be used by U.S. adversaries to launch offensive cyberattacks or even develop powerful biological weapons.

With Post wires