Big tech companies have successfully distracted the world from the existential risks that artificial intelligence still poses to humanity, a leading scientist and AI activist has warned.

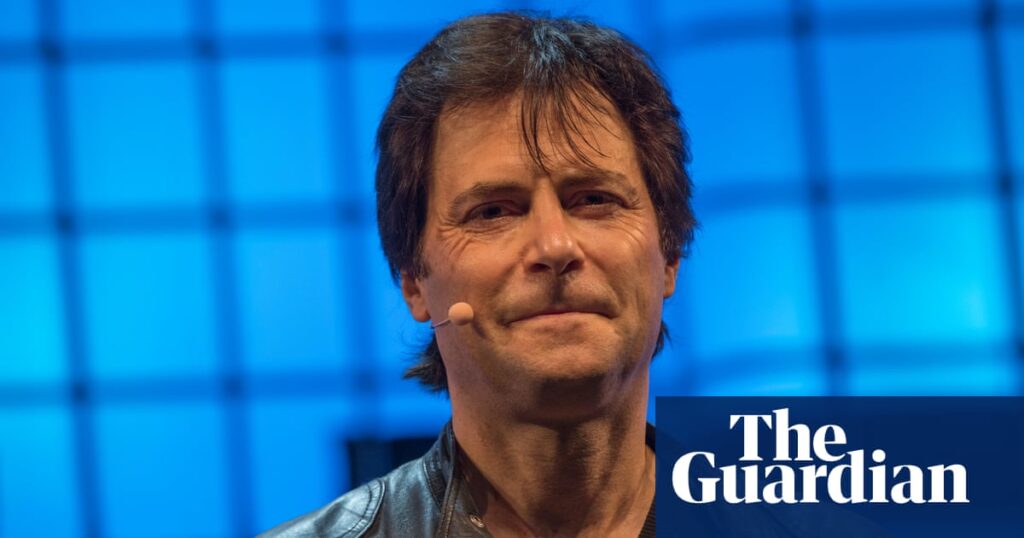

Speaking to The Guardian at the AI Summit in Seoul, South Korea, Max Tegmark said the shift in focus from the extinction of life to a broader concept of artificial intelligence safety risks an unacceptable delay in imposing stricter regulation on the developers of the most powerful programs.

“In 1942, Enrico Fermi built the world's first reactor with a self-sustaining nuclear chain reaction under a football stadium in Chicago,” said Tegmark, who trained as a physicist. “When the leading physicists of the time found out about this, they were absolutely astonished, because they realized that the biggest remaining hurdle to building a nuclear bomb had just been overcome. They realized that it was only a few years away. In fact, it was three years away, with the Trinity test in 1945.”

“An AI model that can pass the Turing test [where someone cannot tell in conversation that they are not speaking to another human] is the same kind of warning about AI that could get out of control. That's why people like Geoffrey Hinton and Yoshua Bengio, and at least privately, many tech CEOs, are panicking right now.”

These concerns led Tegmark's nonprofit Future of Life Institute to call for a six-month “pause” on advanced AI research last year, saying OpenAI's release of its GPT-4 model in March of that year was the canary in the coal mine, proving the risks were unacceptably close.

No moratorium was agreed to despite thousands of signatures from experts, including Hinton and Bengio, two of the three AI “godfathers” who pioneered the approach to machine learning that is the foundation of the current field of AI.

Instead, the AI summit in Seoul, the second of its kind following the one at Bletchley Park in Britain last November, has led the emerging field of AI regulation. “We hoped the letter would legitimize the conversation and we're very happy that it worked,” Tegmark said. “When people see that people like Bengio are worried, they think, 'It's OK that I'm worried.' Even the man at my gas station told me afterwards that he was worried AI would replace us.”

“But now we need to move beyond just talking and take action.”

However, since the original Bletchley Park summit announcements, the focus of international AI regulation has shifted away from existential risks.

In Seoul, only one of the three “high-level” groups addressed safety directly, and that group considered the full range of risks, “from privacy violations to job market disruption to potentially catastrophic consequences.” Tegmark argues that downplaying the most serious risks is unhealthy, and not coincidental.

“That's exactly what I predicted would happen as a result of industry lobbying,” he said. “In 1955, the first papers came out saying that smoking causes lung cancer, and you would think some kind of regulation would come immediately. Instead, the industry exerted so much pressure to distract from it that it took until 1980. I feel that's exactly what's happening now.”

“Of course AI is causing harm today. It's biased and it's causing harm to marginalized groups… but as [the UK science and technology secretary] Michelle Donelan herself puts it, it's not like we can't do both. It's like saying, 'We're going to have hurricanes this year, so let's not pay attention to climate change, let's just focus on the hurricanes.'”

Tegmark's critics make the same argument about his own claims – that the industry is trying to get everyone talking about hypothetical future risks to distract from concrete present-day damage – but he denies the charge: “Even if you take it on its own merits, that's pretty galaxy-brained. It would mean [OpenAI boss] Sam Altman was trying to avoid regulation by telling everyone that the end may be coming for everyone, and trying to convince people like us to sound the alarm.”

Rather, he argues, lukewarm support from some tech leaders is because “they all feel like they're in an impossible situation where they can't quit even if they wanted to. What would happen if the CEO of a tobacco company woke up one morning and realized what they're doing is wrong? They'd replace the CEO. So the only way to put safety first is for governments to institute safety standards for everyone.”