OpenAI, Google, and other technology companies train their chatbots using vast amounts of data culled from books, Wikipedia articles, news articles, and other sources on the internet. But in the future, they hope to use something called synthetic data.

That is because technology companies could use up all the high-quality text available on the internet for developing artificial intelligence. The companies also face copyright lawsuits from authors, news organizations, and computer programmers for using their works without permission. (In one such case, The New York Times sued OpenAI and Microsoft.)

The companies believe that synthetic data can help ease copyright concerns and increase the supply of training materials needed for AI. Here's what you need to know about it:

What is synthetic data?

This is data generated by artificial intelligence.

Does that mean tech companies want AI to train AI?

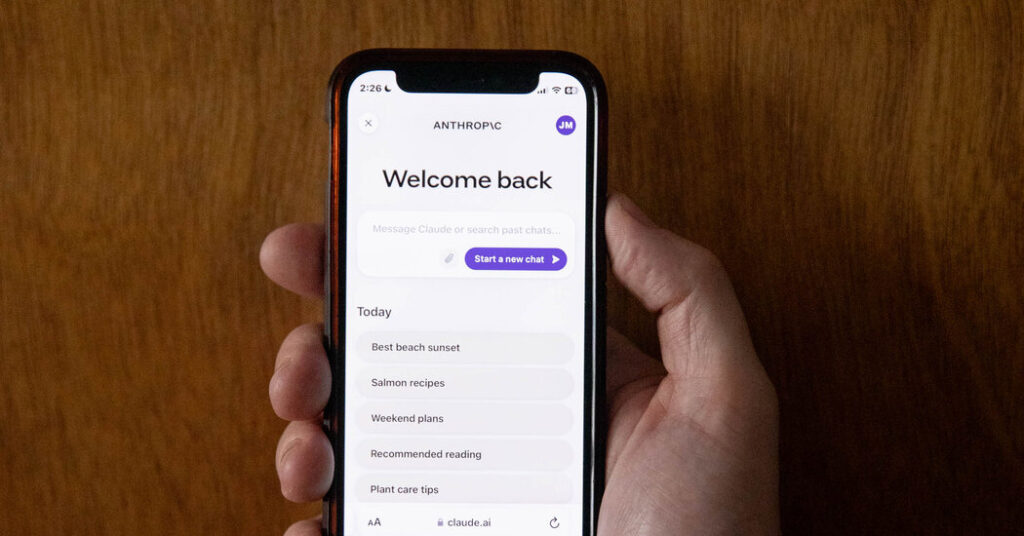

Yes. Rather than training AI models on text written by humans, technology companies such as Google, OpenAI, and Anthropic want to train their technology using data generated by other AI models.

Will synthetic data work?

Not exactly. AI models get things wrong or make things up. They have also shown that they pick up the biases that appear in the internet data they were trained on. Therefore, when companies use AI to train AI, they may end up amplifying their own flaws.

Is synthetic data now widely used by technology companies?

No. Technology companies are experimenting with it. However, because of its potential flaws, synthetic data is not a large part of how AI systems are built today.

So why are tech companies claiming synthetic data is the future?

The companies believe they can improve the way they create synthetic data. OpenAI and others have been exploring ways in which two different AI models can work together to produce more useful and reliable synthetic data.

One AI model generates the data. A second model then judges the data, much as a human would, deciding whether it is good or bad, accurate or not. In fact, AI models are better at judging text than writing it.

“If you give a technology two things, it's very good at choosing which one is best,” said Nathan Lile, CEO of AI startup SynthLabs.

The idea is that this will provide the high-quality data needed to train even better chatbots.
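The two-model arrangement can be sketched in a few lines of Python. This is only a toy illustration under stated assumptions: `generate_candidates`, `judge`, and `build_synthetic_dataset` are hypothetical names, the "generator" produces sums that are sometimes off by one, and the "judge" simply checks the arithmetic. In a real system both roles would be played by AI models, and the judge would not have a perfect answer key.

```python
import random

def generate_candidates(prompt, n, rng):
    """Toy generator (stand-in for the first AI model).

    The prompt is a pair of integers to add. Some drafts are
    deliberately wrong to mimic a model that makes mistakes.
    """
    a, b = prompt
    candidates = []
    for _ in range(n):
        error = rng.choice([0, 0, 0, 1, -1])  # some drafts are off by one
        candidates.append(a + b + error)
    return candidates

def judge(prompt, answer):
    """Toy judge (stand-in for the second AI model): accept or reject a draft."""
    a, b = prompt
    return answer == a + b

def build_synthetic_dataset(prompts, n_per_prompt=5, seed=0):
    """Keep only the generated examples that the judge accepts."""
    rng = random.Random(seed)
    dataset = []
    for prompt in prompts:
        for answer in generate_candidates(prompt, n_per_prompt, rng):
            if judge(prompt, answer):
                dataset.append((prompt, answer))
    return dataset

if __name__ == "__main__":
    for prompt, answer in build_synthetic_dataset([(2, 3), (10, 7)]):
        print(prompt, "->", answer)
```

Only the drafts that pass the judge end up in the training set, which is why the quality of the judge matters so much.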

Does this technique work?

In a sense. It all comes down to that second AI model. How well does it judge text?

Anthropic has been the most active in this effort. It fine-tunes the second AI model using a “constitution” handpicked by the company's researchers. This teaches the model to select text that supports certain principles, such as freedom, equality, and brotherhood, or life, liberty, and personal security. Anthropic’s method is known as “Constitutional AI.”

Here's how two AI models can work together to generate synthetic data using an Anthropic-like process.
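The selection step can be sketched in Python. This is a toy illustration, not Anthropic's actual system: the `PRINCIPLES` list, the keyword-counting `score` function, and the record format are all assumptions made for the example. A real judge would be a fine-tuned AI model, not a keyword match.

```python
# Hypothetical stand-ins for the principles in a written "constitution".
PRINCIPLES = ["freedom", "equality", "liberty"]

def score(text, principles):
    """Toy judge score: count how many principle keywords appear in the text."""
    lowered = text.lower()
    return sum(1 for principle in principles if principle in lowered)

def choose(response_a, response_b, principles):
    """Compare two drafts and return (chosen, rejected). Ties go to response_a."""
    if score(response_b, principles) > score(response_a, principles):
        return response_b, response_a
    return response_a, response_b

def make_preference_records(drafts_by_prompt, principles):
    """Turn pairs of generated drafts into chosen/rejected training records."""
    records = []
    for prompt, (draft_a, draft_b) in drafts_by_prompt.items():
        chosen, rejected = choose(draft_a, draft_b, principles)
        records.append({"prompt": prompt, "chosen": chosen, "rejected": rejected})
    return records

if __name__ == "__main__":
    drafts = {
        "Who deserves rights?": (
            "Only some people matter.",
            "Everyone deserves liberty and equality.",
        ),
    }
    for record in make_preference_records(drafts, PRINCIPLES):
        print(record)
```

The judge never writes anything itself. It only picks between two drafts, which is the kind of task the models are said to be better at.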

Still, humans are needed to make sure the second AI model stays on track. This limits the amount of synthetic data that can be generated by this process. And researchers are divided on whether methods like Anthropic's will continue to improve AI systems.

Can synthetic data help companies avoid using copyrighted information?

The AI models that generate synthetic data were themselves trained on human-generated data, much of which is copyrighted. Therefore, copyright owners can still claim that companies like OpenAI and Anthropic used their copyrighted text, images, and videos without permission.

Jeff Clune, a computer science professor at the University of British Columbia who previously worked as a researcher at OpenAI, said AI models could eventually be more powerful than the human brain in some ways. But they will do so because they learned from the human brain.

“In Newton's words, AI can see further by standing on the shoulders of giant human datasets,” he said.