The race is underway to build artificial general intelligence, a futuristic vision of machines that are as smart as humans, or at least capable of doing as many things as humans.

Achieving such a concept, commonly referred to as AGI, is the driving mission of ChatGPT-maker OpenAI and a priority for the elite research arms of tech giants Amazon, Google, Meta and Microsoft.

It is also a cause for concern for world governments. Leading AI scientists published research Thursday in the journal Science warning that unchecked AI agents with “long-term planning” skills could pose an existential threat to humanity.

But what exactly is AGI, and how will we know when it has been achieved? Once on the fringe of computer science, it is now a buzzword that is constantly being redefined by those trying to make it happen.

What is AGI?

Artificial general intelligence is not to be confused with the similar-sounding generative AI, which describes the AI systems behind the crop of tools that “generate” new documents, images and sounds. AGI is a far more nebulous idea.

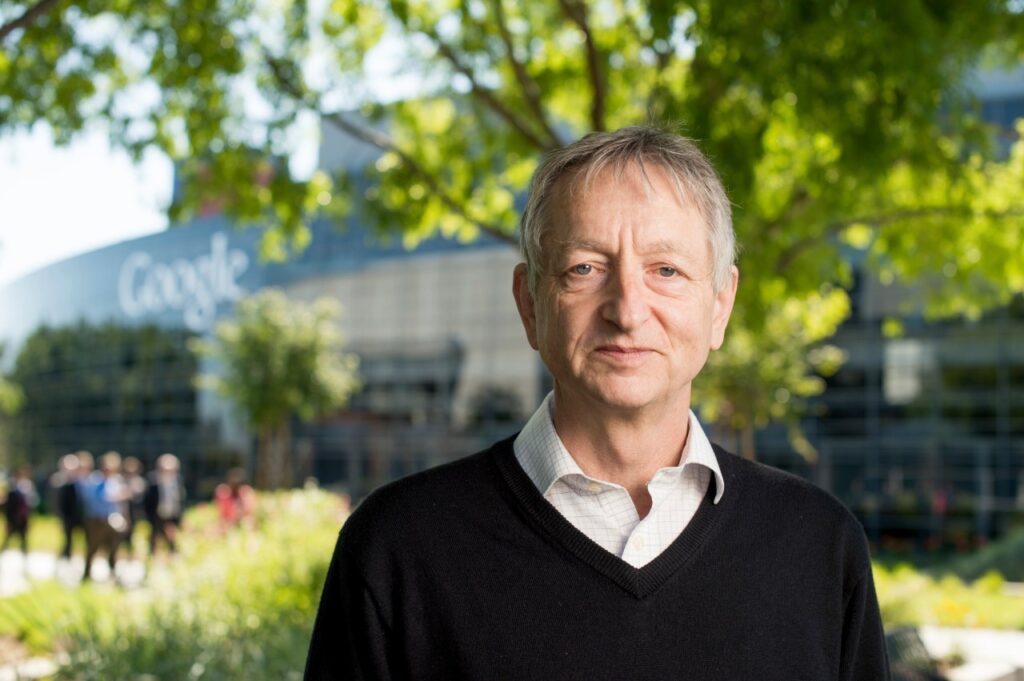

It's not a technical term but “an ill-defined but serious concept,” said Geoffrey Hinton, a pioneering AI scientist known as the “godfather of AI.”

“I don't think there is a consensus on what that term means,” Hinton said in an email this week. “I use it to mean AI that is at least as good as humans at nearly all of the cognitive things that humans do.”

Hinton prefers another term, “superintelligence,” which is “AGI that is better than humans.”

A small group of early proponents of the term AGI were looking to evoke how mid-20th century computer scientists envisioned an intelligent machine. That was before AI research branched out into subfields that developed specialized, commercially viable versions of the technology, from face recognition to speech-recognizing voice assistants like Siri and Alexa.

Mainstream AI research “turned away from the original vision of artificial intelligence, which at the beginning was pretty ambitious,” said Pei Wang, a professor who teaches an AGI course at Temple University and helped organize the first AGI conference in 2008.

Putting the “G” in AGI was a signal to those who “still want to do the big thing. We don't want to build tools. We want to build a thinking machine,” Wang said.

Are we at AGI yet?

Without a clear definition, it is difficult to know when companies or research groups will achieve artificial general intelligence, or if they have already achieved it.

“Twenty years ago, people would have happily agreed that a system with the capabilities of GPT-4 or [Google's] Gemini had achieved general intelligence comparable to that of humans,” Hinton said. “Being able to answer more or less any question in a sensible way would have passed the test. But now that AI can do that, people want to change the test.”

Improvements in “autoregressive” AI techniques, which predict the most plausible next word in a sequence, combined with massive computing power to train those systems on mountains of data, have produced impressive chatbots. But they are still not quite the AGI that many people had in mind. Getting to AGI requires technology that can perform as well as humans across a wide variety of tasks, including reasoning, planning and learning from experience.

Some researchers hope to find consensus on how to measure it. That is one of the topics of the first AGI workshop at a major AI research conference next month in Vienna, Austria.

“This really requires effort and attention from the community so that we can mutually agree on some classification of AGI,” said workshop organizer Jiaxuan You, an assistant professor at the University of Illinois at Urbana-Champaign. One idea is to break it down into levels, in the same way automakers try to benchmark the path between cruise control and fully self-driving cars.

Others plan to figure it out for themselves. San Francisco-based OpenAI has given its nonprofit board, whose members include a former U.S. Treasury secretary, the responsibility of deciding when its AI systems have reached the point at which they “outperform humans at most economically valuable work.”

“The board determines when we've attained AGI,” according to OpenAI's own explanation of its governance structure. Such an achievement would cut off Microsoft, the company's biggest partner, from the rights to commercialize such a system, because the terms of their agreements “only apply to pre-AGI technology.”

Is AGI dangerous?

Hinton made global headlines last year when he quit Google and sounded a warning about AI's existential dangers. A new scientific study published Thursday could reinforce those concerns.

Its lead author is Michael Cohen, a researcher at the University of California, Berkeley, who studies “the expected behavior of generally intelligent artificial agents,” particularly those competent enough to “present a real threat to us by outplanning us.”

Cohen made clear in an interview Thursday that such long-term AI planning agents do not yet exist. But they “could be,” as tech companies seek to combine today's chatbot technology with more deliberate planning skills using a technique known as reinforcement learning.

“Giving an advanced AI system the objective to maximize its reward and, at some point, withholding reward from it, strongly incentivizes the AI system to take humans out of the loop, if it has the opportunity,” the paper states. Its co-authors include renowned AI scientists Yoshua Bengio and Stuart Russell, as well as law professor and former OpenAI adviser Gillian Hadfield.

“I hope we've made the case that people in government need to start thinking seriously about exactly what regulations we need to address this problem,” Cohen said. For now, “governments only know what these companies decide to tell them.”

Too legit to quit AGI?

With so much money being poured into the promise of advances in AI, it's no wonder AGI has become a corporate buzzword, sometimes sparking religious fervor.

The tech world has become divided between those who argue that AI should be developed slowly and carefully and others, including venture capitalists and rapper MC Hammer, who have declared themselves part of an “accelerationist” camp.

DeepMind, a London-based startup founded in 2010 and now part of Google, was one of the first companies to explicitly set out to develop AGI. OpenAI later did something similar in 2015 with a commitment to safety.

Now it might seem like everyone else is jumping on the bandwagon. Google co-founder Sergey Brin was recently seen hanging out at a California venue called AGI House. And less than three years after changing its name from Facebook to focus on virtual worlds, Meta Platforms revealed in January that AGI was also at the top of its agenda.

Meta CEO Mark Zuckerberg said his company's long-term goal is “building full general intelligence,” which would require advances in reasoning, planning, coding and other cognitive abilities. While Zuckerberg's company has long had researchers focused on those subjects, his attention to them marked a change in tone.

At Amazon, one sign of new messaging was when the chief scientist for voice assistant Alexa changed his title to become chief scientist for AGI.

While less tangible to Wall Street than generative AI, broadcasting AGI ambitions may help recruit AI talent who have a choice in where they want to work.

When choosing between a “traditional AI lab” and one whose “goal is to build AGI” and that has sufficient resources to do so, most people would choose the latter, said You, the University of Illinois researcher.